AI, Ethics & Wellbeing: Sharing LSST’s Voice at Oxford University

Article Date | 6 October, 2025

By Dr Elaheh Barzegar, Graduate Trainee Lecturer, LSST Wembley

When Dreams Meet Research

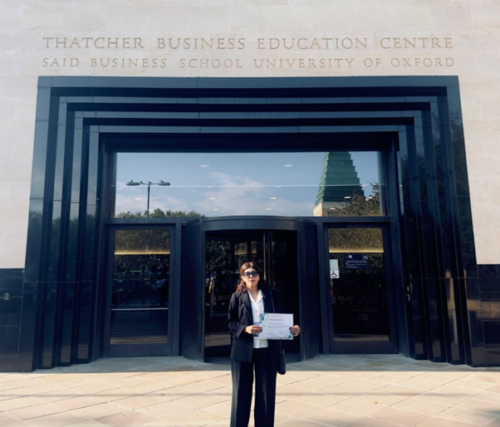

Dreams are not merely aspirations – they are guiding principles that shape our journeys, even when the path unfolds in unexpected ways. On 22 August 2025, I had the distinct privilege of presenting my research at the 9th International Conference on Modern Research in Education, Teaching, and Learning (ICMETL 2025), held at Oxford University’s Saïd Business School.

For me, this was more than a professional milestone. It was a moment that brought together years of research, international scholarly exchange, and a long-held dream of experiencing Oxford’s historic and intellectually vibrant environment.

Yet my presentation was not only about reaching Oxford. It was also about addressing one of the most pressing questions of our time: how do we balance the promise of artificial intelligence with the ethical and psychological wellbeing of students?

Presenting LSST Research on AI and Ethics

At Oxford, I presented findings from a study I conducted with undergraduate students across three UK universities. The research examined how students use AI tools such as ChatGPT and Grammarly, and what this means for their mental health and ethical behaviour.

The results were striking:

- Frequent AI use was linked to higher levels of cognitive strain and psychological distress. Rather than reducing pressure, heavy reliance on AI sometimes made students feel more overwhelmed or less confident in their own abilities.

- Cognitive load theory helped explain this paradox. By outsourcing too much thinking to AI, students risked disengaging from deep learning processes – leading to attentional lapses and reduced memory retention.

- The transactional model of stress further illustrated how AI can increase perceived pressure. Students often felt they were falling behind if they did not match their peers’ use of AI, creating new stressors instead of reducing them.

- Crucially, institutional guidance mattered. Students who reported clear policies and support around AI use were far more likely to engage ethically and responsibly. This is consistent with the theory of planned behaviour: when universities set clear norms, students are more likely to follow them.

Students themselves expressed the tension behind these findings:

- “I use AI almost every day for essays, but I feel like I’m losing my own voice. I worry I won’t be able to write without it anymore.”

- “It helps me keep up, but the pressure to always use it makes me anxious — like I’ll fall behind if I don’t.”

At the same time, the role of universities was clear:

- “When my lecturer explained what counts as ethical use, I felt more confident. Before that, I honestly didn’t know if I was cheating or not.”

These voices capture what the data revealed: AI is not just a technical tool, but something that profoundly shapes student confidence, stress, and sense of integrity.

Why This Matters – AI’s Double-Edged Role in Education

AI is often hailed as a revolution for higher education. It can:

- Speed up research and writing.

- Provide personalised feedback.

- Bridge language and accessibility barriers.

- Enable new forms of collaborative and creative work.

But as my research revealed, this efficiency comes at a potential cost. Students may experience:

- Cognitive dependency – losing confidence in their own ability to think critically.

- Stress and burnout – feeling pressure to keep up with digital tools.

- Ethical uncertainty – being unsure what counts as acceptable use and what counts as dishonest use.

One student put it simply: “AI saves me time, but sometimes it makes me doubt myself more than it helps.” This paradox reflects the heart of the challenge — efficiency without wellbeing is not true progress.

Participants of the ICMETL 2025 conference at Oxford University. Photo: LSST.

Dialogue Across Borders

One of the most rewarding aspects of the Oxford conference was engaging with academics and practitioners from around the world. Their questions and perspectives reflected diverse cultural, institutional, and methodological approaches.

I was struck by how universal these concerns were. Whether in continental Europe, Asia, or the UK, students are wrestling with the same uncertainties. One delegate put it well: “The real question is not whether AI belongs in education, but whether education can guide AI responsibly.”

This global exchange reminded me of a vital truth: research gains depth and relevance through dialogue, critique, and collaboration. It is only when findings are tested against different lenses that their true significance emerges.

Walking Through History and Inspiration

Beyond the conference halls, Oxford itself was an inspiration. Accompanied by the conference team, I walked through centuries-old colleges, cobbled streets, and timeless courtyards. Every archway, library, and stone seemed to whisper stories of scholars who shaped knowledge across the centuries.

Being on Oxford’s grounds connected me to a larger purpose: humanity’s ongoing quest for understanding. In that environment, my research felt both relevant and part of a long tradition of questioning, exploring, and learning.

Lessons Learned – Research and Reflection

Reflecting on both the conference and my study, I carried away several lessons that speak to education, technology, and personal growth:

- The power of feedback – Genuine progress arises when we remain open to dialogue, critique, and new perspectives.

- The value of global collaboration – Knowledge expands when it is tested across cultures, disciplines, and borders.

- The inspiration of place – Institutions like Oxford remind us of the timeless pursuit of truth and learning.

- The importance of purpose – Staying true to our values and goals enables us to overcome challenges and recognise our achievements.

These reflections were sharpened by what students told us in the survey. One mature student, juggling studies with full-time work, said: “AI is like a crutch. It helps me walk further, but I don’t want to forget how to walk on my own.”

Where Dreams and Research Converge

Standing at Oxford, presenting research that bridges technology, ethics, and wellbeing, I was reminded of the unexpected ways in which dreams unfold. My journey may not have followed a traditional Oxford route, but it led me to a moment where personal aspiration, academic discovery, and global dialogue converged.

Ultimately, it is the students’ voices that remind me why this research matters. Behind every dataset is a human story — of stress, of hope, of striving to learn in a digital age. Oxford gave me the stage to share these findings, but it is the words of students that continue to echo:

- “I want AI to be a tool, not a trap.”

And that, I believe, is the challenge for higher education moving forward.

At LSST, we strive to create moments like this, where research meets inspiration and scholarship intersects with global exchange. The Oxford conference was not only a milestone in my career but also a reminder that education, at its best, is both timeless and urgently relevant.

As I left Oxford’s historic courtyards, I carried with me not only gratitude but also a renewed conviction: that even in an age of artificial intelligence, it is our humanity – our ethics, our wellbeing, and our dreams – that must remain at the centre of learning.

Learn more at: https://www.icmetl.org/august-2025